Every time you respond to a question, make a quick judgment, or react to someone’s opinion, your brain is taking shortcuts. Not because you’re lazy, but because it’s wired to. These shortcuts are called cognitive biases: automatic mental patterns that filter everything you see, hear, and believe. And they’re not just harmless quirks. They shape your words, your choices, and even how you interpret facts, often without you realizing it.

Why Your Brain Loves Belief-Consistent Answers

Your brain doesn’t want to be wrong. It wants to feel right. That’s why, when you hear something that matches what you already believe, your brain lights up. Neuroscientists have seen this in fMRI scans: when people encounter information that confirms their views, the ventromedial prefrontal cortex, a part of the brain tied to reward and emotion, is activated. Meanwhile, the dorsolateral prefrontal cortex, responsible for logic and critical thinking, gets quieter.

This isn’t just about politics. It happens when you’re choosing a product, judging a coworker, or deciding if a doctor’s advice makes sense. If the message fits your worldview, you accept it faster. If it doesn’t? You dismiss it, even if it’s backed by data.

Take confirmation bias, the most powerful of all cognitive biases. In a 2021 meta-analysis of over 1,200 studies, researchers found it had the strongest effect on how people respond to new information. People didn’t just prefer confirming evidence; they actively ignored, distorted, or discredited anything that challenged their beliefs. In one study, when Reddit users were shown facts contradicting their political views, their stress levels spiked by 63%. They didn’t just disagree; they felt threatened.

How Beliefs Turn Generic Responses Into Errors

Think about how often you say things like:

- "They’re just lazy."

- "I knew that would happen."

- "Everyone thinks this way."

When Beliefs Cost Real Money, and Lives

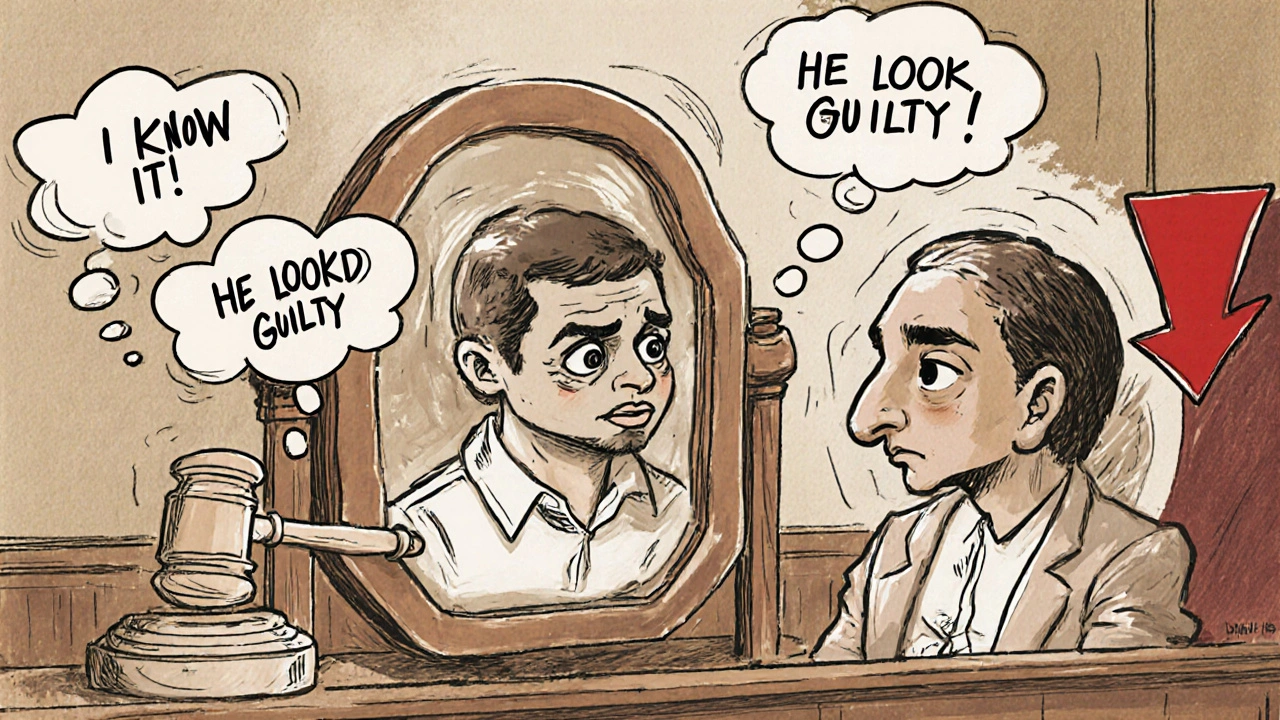

These biases aren’t just annoying. They have real consequences.

In healthcare, diagnostic errors caused by cognitive bias contribute to 12-15% of adverse events, according to Johns Hopkins Medicine. A doctor who believes a patient is "just anxious" might miss a heart attack. A nurse who assumes an elderly patient is "just confused" might overlook signs of infection.

In law, confirmation bias has helped send innocent people to prison. The Innocence Project found that in 69% of DNA-exonerated wrongful convictions, eyewitnesses misidentified suspects, because they expected the suspect to look a certain way. Their belief shaped their memory.

And in finance? Overconfidence and optimism bias lead people to believe they’re smarter than the market. A 2023 Journal of Finance study tracked 50,000 retail investors. Those who underestimated their risk of loss by 25% or more earned 4.7 percentage points less per year than those who were more realistic. That’s not bad luck. That’s bias in action.
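To get a feel for what a 4.7-point annual gap can mean over time, it helps to compound it. The sketch below is purely illustrative: the starting balance, the 7% baseline return, and the 20-year horizon are assumptions picked for the example, not figures from the study. Only the 4.7-point gap comes from the text above.

```python
def final_balance(start: float, annual_return: float, years: int) -> float:
    """Grow `start` at a fixed yearly return (0.07 means 7%), compounded annually."""
    return start * (1 + annual_return) ** years


# Assumed inputs for illustration only (not taken from the study).
START = 10_000.0         # hypothetical starting balance
BASELINE_RETURN = 0.07   # hypothetical return for the more realistic investor
YEARS = 20               # hypothetical investment horizon

# The one figure taken from the article: 4.7 percentage points per year.
GAP = 0.047

realistic = final_balance(START, BASELINE_RETURN, YEARS)
overconfident = final_balance(START, BASELINE_RETURN - GAP, YEARS)
shortfall = realistic - overconfident

print(f"Realistic investor:     {realistic:,.0f}")
print(f"Overconfident investor: {overconfident:,.0f}")
print(f"Shortfall after {YEARS} years: {shortfall:,.0f}")
```

Under these assumed numbers, the gap does not simply add up year by year; it compounds, so the difference between the two balances widens the longer the horizon runs. Different assumptions will give different totals.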

Why You Can’t Just "Be More Rational"

You’ve probably heard: "Just think before you react." But Nobel laureate Daniel Kahneman showed that’s not how the brain works. He described thinking in terms of two systems: System 1 (fast, automatic, emotional) and System 2 (slow, logical, effortful). Most of your responses come from System 1. It’s efficient. It lets you drive, chat, and scroll without overthinking. But it’s also easily fooled. System 2 is lazy. It doesn’t jump in unless it’s forced to.

That’s why simply knowing about biases doesn’t fix them. A 2002 Princeton study found that 86% of people believed they were less biased than their peers. That’s called the bias blind spot, and it’s universal. The more you think you’re rational, the more likely you are to be ruled by unconscious beliefs.

How to Break the Pattern Without Exhausting Yourself

You don’t need to become a philosopher. You need practical tools.

One of the most effective? The "consider-the-opposite" technique. Before you respond to something that triggers you, ask: "What if I’m wrong? What evidence would prove me wrong?" University of Chicago researchers found this cuts confirmation bias by nearly 38%. In medical settings, hospitals now require doctors to list three alternative diagnoses before settling on one. That simple rule reduced diagnostic errors by 28%.

Another tactic: slow down your responses. When someone says something that rubs you the wrong way, pause for five seconds. Breathe. Don’t reply immediately. That tiny delay gives System 2 a chance to wake up.

Organizations are starting to use tech to help. IBM’s Watson OpenScale monitors AI decision-making and flags belief-driven patterns in real time, reducing bias in automated responses by over 34%.

It’s Not About Being Perfect, It’s About Being Aware

You won’t eliminate cognitive biases. No one can. They’re built into how humans evolved to survive. But you can learn to spot them in your own thinking. The goal isn’t to stop having beliefs. It’s to stop letting them control your responses without you knowing.

When you catch yourself saying, "Everyone knows that," pause. Ask: "Who exactly? And how do I know?" When you blame someone for a mistake, ask: "Would I say the same if it were me?" When you feel certain about something, ask: "What’s the one thing that could prove me wrong?"

These aren’t philosophical exercises. They’re behavioral nudges: small shifts that lead to smarter, fairer, and more accurate responses. The world doesn’t need more certainty. It needs more curiosity.

What’s Changing Now, and What’s Coming

Cognitive bias isn’t just a psychology topic anymore. It’s becoming part of policy, tech, and education.

In 2024, the FDA approved the first digital therapy designed to reduce cognitive bias. Developed by Pear Therapeutics, it uses guided exercises to help users recognize and reframe belief-driven thinking.

The European Union’s AI Act, effective February 2025, requires all high-risk AI systems to be tested for cognitive bias. Companies that fail face fines up to 6% of their global revenue.

In the U.S., 28 states now require high schools to teach cognitive bias literacy. Students learn how their own thinking can distort facts, not to make them skeptical of everything but to help them think more clearly.

Even Google has jumped in. Its "Bias Scanner" API analyzes over 2.4 billion queries a month, detecting language patterns that signal belief-driven responses with 87% accuracy.

The message is clear: understanding how beliefs shape responses isn’t optional anymore. It’s essential.

What’s the most common cognitive bias in everyday conversations?

Confirmation bias is the most common. It makes you notice, remember, and repeat information that supports what you already believe, while ignoring or dismissing anything that doesn’t. This happens in casual chats, social media comments, and even work meetings, often without you realizing it.

Can you train yourself to reduce cognitive biases?

Yes, but not by willpower alone. Research shows structured techniques like "consider-the-opposite," real-time feedback tools, and regular reflection reduce bias by 30-40% over 6-8 weeks. Apps and digital tools can help, but lasting change requires consistent practice, not one-off exercises.

Do cultural differences affect cognitive biases?

Absolutely. Self-serving bias, the habit of blaming failures on outside factors while taking credit for successes, is much stronger in individualistic cultures like the U.S. and Australia than in collectivist cultures like Japan or South Korea. In-group/out-group bias also varies, with stronger emotional reactions to out-group members in societies with higher social division.

Why do people think they’re less biased than others?

This is called the bias blind spot. A 2002 Princeton study found 86% of people believed they were less biased than their peers. The reason? We can see our own thoughts clearly, but we only see others’ actions. So we assume our decisions are rational, while we judge others by their behavior, which looks biased from the outside.

Are cognitive biases the same as stereotypes?

Stereotypes are one type of cognitive bias: they’re simplified beliefs about groups of people. But cognitive biases are broader. They include things like hindsight bias, anchoring, and overconfidence, which affect how we interpret data, make decisions, and remember events, not just how we judge others.

Can AI help reduce human cognitive bias?

Yes, but only if designed carefully. AI can flag biased language, suggest alternative explanations, or remind users to consider opposing views. Tools like IBM’s Watson OpenScale have reduced bias in automated decisions by over 34%. But AI can also amplify human bias if trained on biased data. The key is human oversight and continuous monitoring.
